Why Google Almost Killed the Kubernetes Platform: An Origin Story

The Kubernetes platform stands as the world’s leading container orchestration system today, but few know how close it came to being canceled at Google. What’s now the backbone of modern cloud infrastructure was once considered an unnecessary experiment by several senior Google executives.

Table of Contents

- The Birth of Borg: Google’s Internal Container System

  - How Google managed massive workloads

  - Key engineers behind the system

  - Limitations that sparked new thinking

- From Borg to Omega: The Evolution Continues

  - Why Google needed a new system

  - Technical improvements in Omega

- The Kubernetes Project: A Risky Experiment

  - The founding team’s vision

  - Early technical challenges

  - Building the first prototype

- Internal Resistance: Why Google Almost Pulled the Plug

  - Competing priorities within Google Cloud

  - Resource allocation battles

  - The business case against Kubernetes

- The Turning Point: How Kubernetes Survived

  - Key advocates who saved the project

  - Critical technical breakthroughs

  - The decision to open source

- Conclusion

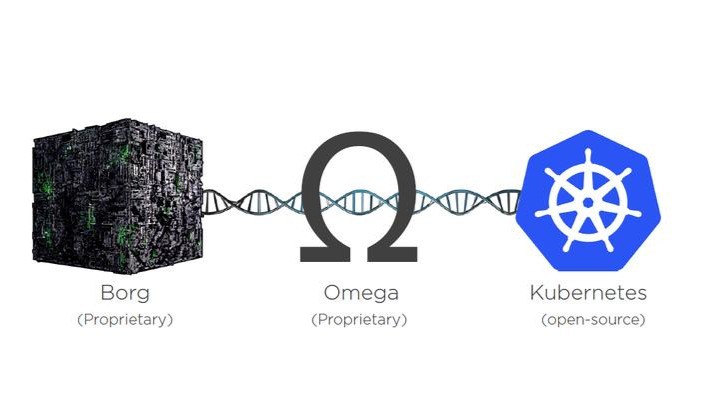

This remarkable story traces Kubernetes’ journey from Google’s internal container management systems to its near-death experience and eventual triumph. Starting with the Borg system that powered Google’s massive infrastructure, through the Omega experiment, and finally to the birth of Kubernetes, we’ll explore how a small team of determined engineers fought to keep their vision alive.

The Birth of Borg: Google’s Internal Container System

Long before the Kubernetes platform became an industry standard, Google was quietly refining container management through an internal system called Borg. As early as 2008, Google had already been running containerized workloads for production services, developing expertise that would later reshape how the industry approached orchestration.

How Google managed massive workloads

Borg emerged from Google’s need to efficiently manage its rapidly expanding infrastructure. Initially, Google used two separate systems: Babysitter for long-running services like Gmail and Google Search, and Global Work Queue for batch processing jobs. However, as demands grew, the company unified these approaches into a single cluster management system.

At its core, Borg served as a sophisticated container orchestration system that ran hundreds of thousands of jobs across clusters with up to tens of thousands of machines. This massive scale allowed Google to efficiently power its search engine, YouTube, Gmail, and other services while maintaining extraordinarily high utilization rates.

The architecture of Borg featured several key components working in harmony:

- Borgmaster: A centralized controller responsible for maintaining cluster state, scheduling, and communication with worker nodes

- Borglet: Agents running on each machine that monitored tasks and reported resource utilization

- Scheduler: A sophisticated system that ranked jobs by priority and allocated resources accordingly

Borg’s ability to run both latency-sensitive user-facing applications and resource-hungry batch jobs on the same physical machines was perhaps its most valuable feature. This coexistence significantly improved resource utilization across Google’s data centers, essentially making batch jobs “free” by using the reserved but unused capacity of production services.
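The co-location idea can be illustrated with a small Python sketch. This is not Borg’s scheduler: the `Task` and `Machine` classes, the priority scale, and the admission rule are all hypothetical, chosen only to show how batch work can fit into capacity that production services have reserved but are not using.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    priority: int        # higher number = more important (hypothetical scale)
    cpu_reserved: float  # cores the task asks for
    cpu_used: float      # cores it actually uses right now
    batch: bool = False


@dataclass
class Machine:
    cpu_total: float
    tasks: list = field(default_factory=list)

    def reserved_by_prod(self) -> float:
        return sum(t.cpu_reserved for t in self.tasks if not t.batch)

    def actually_used(self) -> float:
        return sum(t.cpu_used for t in self.tasks)

    def can_fit(self, task: Task) -> bool:
        if task.batch:
            # Batch work may borrow capacity that is reserved but idle.
            return self.actually_used() + task.cpu_used <= self.cpu_total
        # Production work is admitted against hard reservations only.
        return self.reserved_by_prod() + task.cpu_reserved <= self.cpu_total


def schedule(machine: Machine, pending: list) -> list:
    """Place pending tasks in priority order; return names of placed tasks."""
    placed = []
    for task in sorted(pending, key=lambda t: t.priority, reverse=True):
        if machine.can_fit(task):
            machine.tasks.append(task)
            placed.append(task.name)
    return placed


machine = Machine(cpu_total=16.0)
pending = [
    Task("log-crunch", priority=1, cpu_reserved=6.0, cpu_used=6.0, batch=True),
    Task("web-frontend", priority=10, cpu_reserved=12.0, cpu_used=4.0),
    Task("mail-backend", priority=9, cpu_reserved=4.0, cpu_used=2.0),
]
placed = schedule(machine, pending)
print(placed)  # ['web-frontend', 'mail-backend', 'log-crunch']
```

In this toy example the two production services reserve all 16 cores but use only 6, so the batch job still fits. A real system would also need to evict or throttle batch work when production usage rises, which the sketch leaves out.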

Key engineers behind the system

Borg represented a collaborative effort across multiple Google engineering teams. Many of these engineers later became the founding team behind Kubernetes, bringing their hard-earned knowledge to the open-source world.

The team that developed Borg created not just a container management system but essentially a complete operating system for Google’s data centers. They pioneered approaches to resource isolation, scheduling, and fault tolerance that would eventually become standard practice throughout the industry.

Limitations that sparked new thinking

Despite its impressive capabilities, Borg wasn’t without limitations. As Google’s infrastructure expanded, engineers began identifying areas for improvement. The ecosystem around Borg gradually evolved into “a somewhat heterogeneous, ad-hoc collection of systems” that users had to navigate using several different configuration languages and processes.

Moreover, while powerful, Borg’s interface wasn’t particularly user-friendly. It required specialized knowledge and familiarity with internal tools and documentation. Its monolithic architecture, though efficient, made it challenging to extend or modify components independently.

These limitations prompted Google to experiment with a new system called Omega, designed to improve upon Borg’s software engineering. Omega introduced a more consistent, principled architecture while preserving Borg’s successful patterns. Eventually, many of Omega’s innovations were folded back into Borg.

The lessons learned from both Borg and Omega provided the foundation for what would later become the Kubernetes platform. By 2015, Google was ready to take the wraps off Borg publicly, publishing details at the EuroSys academic computer systems conference. This disclosure coincided with Google’s push to bring container orchestration to the wider developer community through Kubernetes, an open-source project that would fundamentally change how applications are deployed across the cloud.

From Borg to Omega: The Evolution Continues

As Google’s computing needs expanded drastically in the early 2010s, even the mighty Borg system began to show its limitations. Around 2013, Google unveiled Omega, its second-generation cluster management system designed to address the scaling challenges that were becoming increasingly apparent in Borg’s architecture.

Why Google needed a new system

After nearly a decade of relying on Borg, Google engineers identified several critical limitations that threatened to hamper future growth. The monolithic architecture that had served Google well was becoming an obstacle to innovation and scalability. Internal assessments revealed that Borg’s design “restricts the rate at which new features can be deployed, decreases efficiency and utilization, and will eventually limit cluster growth”.

The need for rapid response to changing requirements proved difficult to meet with Borg’s monolithic scheduler architecture. This architectural constraint made it increasingly challenging for Google’s engineers to implement new policies and specialized capabilities without disrupting existing functionality.

Furthermore, Borg had evolved over the years into “a complicated, sophisticated system that is hard to change”. What once started as an elegant solution had gradually transformed into “a somewhat heterogeneous, ad-hoc collection of systems” that required specialized knowledge to navigate effectively.

Perhaps most compelling, John Wilkes, a Google engineer, noted that with an improved system, Google could potentially save “the cost of building an extra data center”. Given the enormous expense of Google’s infrastructure, this efficiency gain represented a significant business opportunity.

Technical improvements in Omega

Omega represented a fundamental shift in Google’s approach to cluster management. Rather than using a single, centralized scheduling algorithm like Borg, Omega introduced a more flexible, scalable architecture with several groundbreaking features:

- Shared-state architecture: Omega stored cluster state in a centralized Paxos-based transaction-oriented store that different parts of the control plane could access concurrently

- Optimistic concurrency control: Multiple schedulers could operate on the same cluster state simultaneously, resolving conflicts through a transaction model

- Decoupled components: The functionality previously centralized in Borgmaster was broken into separate components that acted as peers, eliminating bottlenecks

This architectural approach allowed for greater flexibility and parallelism than had been possible with Borg. In contrast to both monolithic and two-level schedulers, Omega’s shared-state approach “immediately eliminates two issues – limited parallelism due to pessimistic concurrency control, and restricted visibility of resources”.
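The shared-state, optimistic approach described above can be sketched in a few lines of Python. This is a deliberately simplified illustration, not Omega’s implementation: the `SharedClusterState` class, the single global version counter, and the `place` helper are assumptions made for the example, and a real system would detect conflicts at a much finer granularity.

```python
class Conflict(Exception):
    """Raised when a commit is based on stale cluster state."""


class SharedClusterState:
    def __init__(self, free_cpu):
        self.free_cpu = dict(free_cpu)  # machine name -> free cores
        self.version = 0                # bumped on every successful commit

    def snapshot(self):
        """Return a private copy of the state plus the version it was read at."""
        return dict(self.free_cpu), self.version

    def commit(self, read_version, machine, cpu):
        """Apply a placement only if nobody committed since read_version."""
        if read_version != self.version:
            raise Conflict(f"state moved from v{read_version} to v{self.version}")
        if self.free_cpu[machine] < cpu:
            raise Conflict(f"{machine} no longer has {cpu} free cores")
        self.free_cpu[machine] -= cpu
        self.version += 1


def place(state, cpu, max_retries=3):
    """One scheduler's loop: read, decide locally, commit, retry on conflict."""
    for _ in range(max_retries):
        view, version = state.snapshot()
        candidates = [m for m, free in view.items() if free >= cpu]
        if not candidates:
            return None
        machine = max(candidates, key=lambda m: view[m])  # emptiest machine
        try:
            state.commit(version, machine, cpu)
            return machine
        except Conflict:
            continue  # another scheduler won the race; re-read and try again
    return None


state = SharedClusterState({"m1": 8, "m2": 4})

# Two schedulers read the same snapshot at version 0.
view_a, ver_a = state.snapshot()
view_b, ver_b = state.snapshot()

state.commit(ver_a, "m1", 6)      # scheduler A commits first
try:
    state.commit(ver_b, "m1", 6)  # scheduler B's view is now stale
    conflict = False
except Conflict:
    conflict = True

retry_result = place(state, 4)    # B retries against fresh state
print(conflict, retry_result, state.free_cpu)
```

Neither scheduler takes a lock while deciding, which is where the parallelism comes from. The cost is that a losing scheduler has to redo its work, so the approach pays off when conflicts are rare.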

Omega additionally introduced a declarative approach to defining the desired state of the cluster, a concept that would later become fundamental to the Kubernetes platform. This shift in methodology allowed for more sophisticated resource scheduling policies, including better handling of fairness, prioritization, and multi-tenancy scenarios.

Consequently, Omega achieved what Google engineers described as “performance competitive with or superior to other architectures”. The system’s innovative design proved so successful that “many of Omega’s innovations (including multiple schedulers) have since been folded into Borg”, rather than replacing it entirely as initially expected.

Notably, Omega’s influence extended beyond Google’s internal systems. When Google eventually created Kubernetes, they wanted to build something that “incorporated everything we had learned about container management at Google through the design and deployment of Borg and its successor, Omega”. This knowledge transfer ensured that the lessons learned from both systems would benefit the broader technology community through the Kubernetes platform.

The Kubernetes Project: A Risky Experiment

In the fall of 2013, a small team of Google engineers began a side project that would ultimately transform cloud computing. The timing wasn’t random: Docker had recently emerged, popularizing Linux containers and making them accessible to developers everywhere. This convergence of technology and opportunity sparked what would become the Kubernetes platform.

The founding team’s vision

Three Google engineers—Joe Beda, Brendan Burns, and Craig McLuckie—formed the initial core of the Kubernetes project. Working on Google Cloud infrastructure, they recognized something profound: Docker had fundamentally changed how developers approached application packaging and deployment.

“Were it not for Docker’s shifting of the cloud developer’s perspective, Kubernetes simply would not exist,” reflected Brendan Burns.

The team quickly realized that an open-source container orchestration system wasn’t just possible; it was inevitable. This insight motivated them to begin work on what they codenamed “Project Seven of Nine,” a Star Trek reference in keeping with the Borg theme. They were soon joined by other key engineers, including Ville Aikas, Tim Hockin, Dawn Chen, Brian Grant, and Daniel Smith.

Their ambition? To build something that “incorporated everything we had learned about container management at Google through the design and deployment of Borg and its successor, Omega,” yet with “an elegant, simple and easy-to-use UI”.

Early technical challenges

The founding team faced numerous technical hurdles in developing what they called a “minimally viable orchestrator.” For starters, they needed to define exactly what constituted the essential feature set for such a system.

From their experience with Borg and Omega, they identified four critical capabilities:

- Replication to deploy multiple instances of an application

- Load balancing and service discovery to route traffic

- Basic health checking and repair to ensure self-healing

- Scheduling to group machines into a single pool and distribute work

Nevertheless, implementing these features presented significant challenges. The team had to build a system that could work across diverse infrastructure environments—unlike Borg, which was tightly coupled to Google’s internal systems.
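Two of the four capabilities, replication and health checking with repair, fit naturally into a single control loop that compares the desired state with what is actually running. The Python sketch below shows that idea only; it is not Kubernetes code, and the `Instance` class and `reconcile` function are hypothetical names invented for the example.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Instance:
    id: int
    healthy: bool = True


_ids = count(1)  # hands out fresh instance ids: 1, 2, 3, ...


def reconcile(desired_replicas, instances):
    """Return a new instance list that matches the desired replica count."""
    # Health checking and repair: drop instances that fail their check.
    alive = [i for i in instances if i.healthy]
    # Replication: start replacements until the desired count is met.
    while len(alive) < desired_replicas:
        alive.append(Instance(id=next(_ids)))
    # Scale down if there are more instances than requested.
    return alive[:desired_replicas]


instances = reconcile(3, [])         # first pass starts three instances
instances[1].healthy = False         # simulate a failure of instance 2
instances = reconcile(3, instances)  # next pass replaces the failed one
ids = [i.id for i in instances]
print(ids)  # [1, 3, 4]
```

The caller states only how many replicas it wants. Running the same function repeatedly converges on that number whether instances have failed, been added by hand, or never existed, which is what makes the loop self-healing.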

Another major challenge was making the complex technology accessible. Brian Grant later admitted, “We didn’t set out to build a control plane just as a piece of infrastructure, we set out to build a container platform. The control plane was kind of an important design consideration, but it was not a primary focus”.

Building the first prototype

The team began prototyping in late 2013, and after three months of intensive work they had something ready to share. This early version focused exclusively on the core orchestration features they had identified.

When Kubernetes was officially announced by Google on June 6, 2014, it contained precisely those minimal features. In the meantime, the team continued refining the platform based on feedback from early users and contributors.

Joe Beda later reflected that they should have approached the design differently: “I wish we had actually done what Brian (Grant) said we didn’t do, which is actually think about the control plane as a separable thing from the get-go”. This insight underscores how even the founding team couldn’t fully anticipate how the Kubernetes platform would evolve.

After release, the open source community’s response was immediate and enthusiastic. For the next year, the team focused on “building, expanding, polishing and fixing this initial minimally viable orchestrator”. Their efforts culminated in the release of Kubernetes 1.0 at OSCON in July 2015.

What started as a risky experiment had begun its journey toward becoming the second largest open source project in the world after Linux. Yet as we’ll see, the project nearly didn’t make it that far.

Internal Resistance: Why Google Almost Pulled the Plug

Despite Kubernetes’ eventual success, the project faced significant internal resistance at Google that almost led to its cancelation. The experimental platform encountered skepticism from the very company that created it.

Competing priorities within Google Cloud

By 2012, Google Cloud already offered App Engine and Virtual Machines to customers. Many internal critics questioned, “Why do we need a third way to do computing?” This skepticism stemmed from Google’s existing investment in Borg, which already ran billions of containers weekly.

Equally important, Google’s engineering philosophy wasn’t naturally aligned with platform development. As one engineer noted, “Google isn’t a platform company. GCP is a nightmare. Culturally, the entire model of Google is a laissez-faire ‘we can tolerate a little bit of failure because our stuff is free’ attitude.” This cultural disconnect made supporting a reliable platform service challenging.

Resource allocation battles

The Kubernetes platform team faced constant battles for resources within Google. Engineering talent and computing resources were perpetually stretched thin across competing priorities. The team struggled to secure funding and headcount while simultaneously demonstrating value.

The situation grew complicated because Google maintained two container systems simultaneously—Borg for internal services and Kubernetes for external use. As one former Borg SRE explained, “There is certainly a cost to maintaining two stacks, but as of now, it’s worth it.” Nevertheless, this dual approach required justification amid fierce internal competition for limited resources.

The business case against Kubernetes

The strongest argument against continuing the Kubernetes platform centered on Google’s market position. AWS had established cloud dominance years earlier, creating powerful customer lock-in effects. Many executives doubted whether an open-source container platform could meaningfully improve Google’s competitive position.

Furthermore, the complexity of container orchestration raised concerns about adoption barriers. Critics pointed out that “projects of modernization and cultural improvement fail because technologies that provide incredible capability are often difficult to stand up and move forward with.” The business case hinged on whether the technical benefits would outweigh these adoption challenges.

The Turning Point: How Kubernetes Survived

The fate of the Kubernetes platform hung in the balance around 2014, yet a combination of visionary leadership and strategic decisions ultimately rescued what would become computing’s most influential orchestration system.

Key advocates who saved the project

Joe Beda, Brendan Burns, and Craig McLuckie formed the passionate trio that championed Kubernetes internally at Google. Their conviction went beyond technical merit: they recognized that Docker’s rising popularity had created perfect timing for container orchestration. Most importantly, Urs Hölzle, Google’s senior vice president of technical infrastructure, despite initial reservations about releasing “one of our most competitive advantages,” ultimately acknowledged the strategic value of developing a scalable containerized platform.

Critical technical breakthroughs

The technical foundation that saved Kubernetes stemmed from lessons learned over a decade of Borg development. By combining Borg/Omega’s sophisticated orchestration capabilities with Docker’s approachable container format, the team created something truly groundbreaking. As developers worldwide contributed improvements, Kubernetes rapidly evolved from prototype to production-ready platform. Subsequently, this collaborative approach accelerated development far beyond what Google could accomplish internally, addressing complex challenges like stateful applications and multi-cloud deployments.

The decision to open source

Perhaps the most pivotal decision was making Kubernetes fully open source in 2014. This counterintuitive move served multiple strategic purposes:

- It enabled Google to set industry standards for cloud-native infrastructure

- It created a vibrant community of contributors, making the platform more innovative

- It positioned Google strategically against AWS in the cloud computing race

In 2015, Google donated Kubernetes to the newly formed Cloud Native Computing Foundation (CNCF), ensuring its vendor-neutral future. Thereafter, the project flourished: by 2018, Kubernetes had become CNCF’s first graduated project with over 700 actively contributing companies. That same year, Google committed USD 9 million in Google Cloud credits over three years to help CNCF operate Kubernetes development infrastructure.

Handing control to CNCF proved crucial, as it allowed Kubernetes to be developed “in the best interest of its users without vendor lock-in”.

Conclusion

Kubernetes stands as a testament to the power of strategic vision and technical innovation. While Google executives nearly canceled the project, their decision to embrace open-source development transformed container orchestration. This bold move positioned Google as a cloud-native leader while giving developers worldwide access to battle-tested container management expertise.

Looking back, the Kubernetes platform succeeded because it combined Borg’s sophisticated orchestration capabilities with Docker’s accessibility. Though maintaining parallel container systems created challenges, Google’s investment paid off through widespread adoption and industry standardization.

Today, Kubernetes powers countless organizations’ cloud infrastructure, validating the founding team’s original vision. Their determination to share Google’s container management expertise, rather than guard it, created something far more valuable than an internal system – a thriving ecosystem that continues driving cloud computing forward.
